Let’s keep this super-simple: in any disclosure policy, the devil is in the details. If you go to the OpenAI ChatGPT FAQ, there are a few points that should raise the eyebrows of any security engineer trying to protect company data and access. To that end, at the end of this blog you’ll find three ways to opt out of ChatGPT data sharing that will help you and your CISO sleep better at night.

Let’s assume your company has allowed ChatGPT for employee use. Now, let’s take a look at what the ChatGPT FAQ says:

Will you use my conversations for training?

- Yes. Your conversations may be reviewed by our AI trainers to improve our systems.

From their privacy policy:

We’ll retain your Personal Information for only as long as we need in order to provide our Service to you, or for other legitimate business purposes such as resolving disputes, safety and security reasons, or complying with our legal obligations. How long we retain Personal Information will depend on a number of factors, such as the amount, nature, and sensitivity of the information, the potential risk of harm from unauthorized use or disclosure, our purpose for processing the information, and any legal requirements.

Did you notice that the retention period seems a bit vague? To be reductionist: nothing in that language stops OpenAI from retaining any conversation you have (or the corporate you, through your organization’s SSO) indefinitely. So all of those questions and shortcuts AI has helped with could easily come back to bite your company in the future, depending on what you told ChatGPT in the first place.

Here are a few thoughts for staring at your ceiling at midnight:

- Attackers probe ChatGPT for target data like passwords, APIs, or other intellectual property. Crafty prompters know how to coax the AI into giving this data up, and yes, they’re likely looking for anything about your company that points to a vulnerability they can exploit.

- Incorrect data is difficult to purge (including data on people), which contributed to Italy’s ChatGPT ban, so enter information at your own risk.

- Be careful what you give the world – you don’t want your competition finding code, schematics, ideas, or plans from your organization. Samsung recently found this out when one employee asked ChatGPT to check confidential database source code for errors, another asked it to optimize code, and a third fed a recording of a company video meeting into ChatGPT to generate meeting minutes. The data shared by all three employees came back to hurt the company. Let’s not put company information into an intelligent system that’s built to learn, right?
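One way to act on that last point is to scrub obvious secrets from a prompt before it ever leaves your network. The snippet below is only a minimal sketch: the `scrub` function and its two patterns are hypothetical and written for this post, and they will miss plenty that a real secret scanner or DLP tool would catch.

```python
import re

# Hypothetical patterns, for illustration only. Real secret scanning
# needs a much broader rule set than these two.
PATTERNS = [
    # key = value or key: value pairs with a sensitive-looking key name
    (re.compile(r"(?i)\b(password|passwd|secret|token|api[_-]?key)\b(\s*[:=]\s*)\S+"),
     r"\1\2[REDACTED]"),
    # anything shaped like an email address
    (re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"), "[EMAIL]"),
]


def scrub(prompt: str) -> str:
    """Return the prompt with matches of each pattern replaced by a placeholder."""
    for pattern, replacement in PATTERNS:
        prompt = pattern.sub(replacement, prompt)
    return prompt


if __name__ == "__main__":
    print(scrub("Why does this fail? password = hunter2, owner bob@example.com"))
```

A filter like this is a seatbelt, not a policy: it catches careless pastes, but it can’t recognize confidential source code or meeting notes, so the opt-outs below still matter.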

Three ways to opt out of ChatGPT data sharing

There are three ways to opt out of ChatGPT data sharing that can keep company data incognito:

- Use the API, whose terms clearly state: “We do not use Content that you provide to or receive from our API (“API Content”) to develop or improve our Services.” It may be less convenient (especially on mobile), but it’s a step worth taking…

- Set up a secure instance through Microsoft Azure.

- Submit this opt-out form for your company.


- Enter your email.

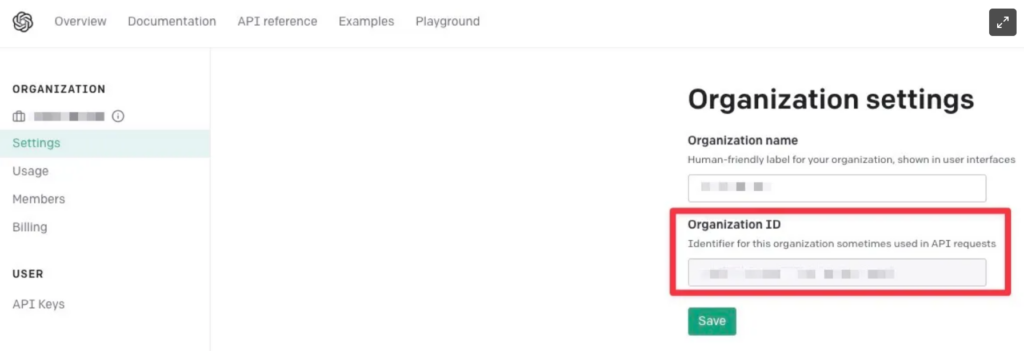

- Find your OpenAI Organization ID as follows:

- Go to this page and log in.

- Select the “Settings” tab and find your Organization name and ID.

- Copy the Organization ID and name.

- Paste them into the opt-out form.

- Hit “Submit.”
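If you go the API route from the first option, the call itself is small. Here’s a rough sketch using only the Python standard library. The endpoint URL and the `gpt-3.5-turbo` model name are our assumptions about the API at the time of writing, and `build_chat_request` and `ask` are helper names made up for this post, so check OpenAI’s current API reference before relying on any of it.

```python
import json
import urllib.request

# Assumed endpoint for the chat completions API; verify against current docs.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, prompt: str, model: str = "gpt-3.5-turbo"):
    """Build (but do not send) a POST request carrying a single user message."""
    body = json.dumps({
        "model": model,  # assumed model name
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(api_key: str, prompt: str) -> str:
    """Send the request and return the text of the first reply."""
    with urllib.request.urlopen(build_chat_request(api_key, prompt), timeout=30) as resp:
        reply = json.load(resp)
    return reply["choices"][0]["message"]["content"]
```

Calling `ask(your_key, "Summarize this paragraph")` sends your prompt under the API terms quoted above rather than through the consumer chat interface. Keep the key itself in a secrets manager or environment variable, not in source code.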

Speaking of reading the fine print, have you wondered what the Banyan privacy policies are? We’re glad you’re curious and welcome you to read further.